ChatGPT And Child Safety: Exploring Its Potential To Detect Acute Distress


Introduction: The rise of AI chatbots like ChatGPT has sparked numerous discussions, ranging from their educational applications to concerns about misuse. A less-explored area is their potential for safeguarding children. Could this technology be used to detect signs of acute distress in children, potentially preventing tragic outcomes? This article examines the possibilities and challenges of using AI like ChatGPT to identify and respond to children's cries for help.

The Promise of AI in Child Protection:

Traditional methods of child protection often rely on reactive measures, responding to reported incidents rather than proactively identifying at-risk children. ChatGPT, with its natural language processing capabilities, could offer a more proactive approach. By analyzing text-based communication, such as online chats, emails, or social media posts, the AI could identify patterns and keywords indicative of acute distress, such as suicidal ideation, self-harm, abuse, or neglect.

- Early Warning System: Imagine a scenario where a child confides in a chatbot about their struggles, revealing signs of depression or abuse. ChatGPT could be programmed to identify these red flags and alert human authorities, potentially saving a life.

- Accessibility and Anonymity: For children hesitant to confide in adults, an AI chatbot could offer a safe and anonymous space to express their feelings without fear of judgment or reprisal. This anonymity is crucial, especially in cases of domestic abuse or bullying.

- Data Analysis and Pattern Recognition: Because the AI can analyze large volumes of text, it may pick up subtle patterns and indicators of distress that human observers would miss and that might otherwise go unnoticed.
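
As a rough illustration of the pattern-and-keyword idea described above, the following Python sketch flags messages that contain phrases from a small watch list and marks them for human review. The phrase list, the `Flag` type, and the `flag_message` function are all assumptions made for this example; they are not part of ChatGPT or of any real safeguarding product, and a real system would need clinically validated signals rather than simple string matching.

```python
import re
from dataclasses import dataclass

# Hypothetical watch list for illustration only; not a validated clinical lexicon.
WATCH_PATTERNS = {
    "self_harm": re.compile(r"\b(hurt myself|cut myself)\b", re.IGNORECASE),
    "suicidal_ideation": re.compile(r"\b(want to die|kill myself)\b", re.IGNORECASE),
    "abuse": re.compile(r"\b(hits me|scared to go home)\b", re.IGNORECASE),
}


@dataclass
class Flag:
    category: str      # which watch-list category matched
    matched_text: str  # the exact phrase found in the message


def flag_message(text: str) -> list[Flag]:
    """Return one Flag per category whose pattern appears in the text."""
    flags = []
    for category, pattern in WATCH_PATTERNS.items():
        match = pattern.search(text)
        if match:
            flags.append(Flag(category, match.group(0)))
    return flags


if __name__ == "__main__":
    message = "I am scared to go home and sometimes I want to die"
    for flag in flag_message(message):
        # The sketch only surfaces the message; a person decides what happens next.
        print(f"needs human review: {flag.category} ({flag.matched_text!r})")
```

The sketch deliberately stops at surfacing a message for review. It takes no action on its own, so a trained person still makes the assessment.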

Challenges and Ethical Considerations:

While the potential benefits are significant, several challenges and ethical considerations must be addressed before widespread implementation:

- Accuracy and False Positives: The risk of false positives is a major concern. Incorrectly identifying distress could lead to unnecessary interventions and potentially damage trust. Robust algorithms and rigorous testing are essential to minimize this risk.

- Privacy and Data Security: Protecting children's privacy and ensuring the secure storage and handling of sensitive data are paramount. Stringent data protection measures are crucial to prevent misuse and unauthorized access.

- Bias and Fairness: AI algorithms are trained on data, and if that data reflects societal biases, the AI might inadvertently perpetuate these biases in its analysis. Ensuring fairness and avoiding discriminatory outcomes is a critical ethical consideration.

- The Human Element: While AI can be a valuable tool, it should not replace human interaction and professional intervention. It's crucial to develop a system where AI alerts human professionals who can then conduct a proper assessment and provide necessary support.
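
The false-positive concern raised above can be made concrete with a little arithmetic. The sketch below uses Bayes' rule to compute what share of alerts would correspond to genuine distress, given a prevalence, a detection rate, and a false-positive rate. All three input numbers are hypothetical, chosen only to show how a low base rate affects the result; they are not measurements of ChatGPT or of any real system.

```python
def alert_precision(prevalence: float, sensitivity: float,
                    false_positive_rate: float) -> float:
    """Fraction of alerts that correspond to genuine distress (Bayes' rule)."""
    true_alerts = prevalence * sensitivity
    false_alerts = (1.0 - prevalence) * false_positive_rate
    return true_alerts / (true_alerts + false_alerts)


if __name__ == "__main__":
    # Hypothetical inputs: 1% of conversations involve acute distress,
    # the detector catches 90% of those, and it wrongly flags 5% of the rest.
    share = alert_precision(prevalence=0.01, sensitivity=0.90,
                            false_positive_rate=0.05)
    print(f"share of alerts that are genuine: {share:.1%}")
```

Under these assumed numbers, only about 15% of alerts would be genuine, which is one reason the human assessment step matters even when a detector looks accurate on paper.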

The Future of AI and Child Safety:

The use of AI like ChatGPT in child protection is still in its early stages. However, the potential to save lives and improve child welfare is undeniable. Further research, rigorous testing, and careful ethical consideration are needed to develop responsible and effective applications. Collaborations between AI developers, child protection agencies, and mental health professionals are crucial to navigate the complexities involved.

Call to Action: Learn more about child safety resources in your community and support organizations working to prevent child abuse and neglect. [Link to relevant child protection organization]. The responsible development and implementation of AI in child protection require ongoing dialogue and collaboration. Let's work together to harness the potential of AI for the betterment of children's lives.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on ChatGPT And Child Safety: Exploring Its Potential To Detect Acute Distress. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

New Restrictions On Family Reunions For Asylum Applicants

Sep 03, 2025

New Restrictions On Family Reunions For Asylum Applicants

Sep 03, 2025 -

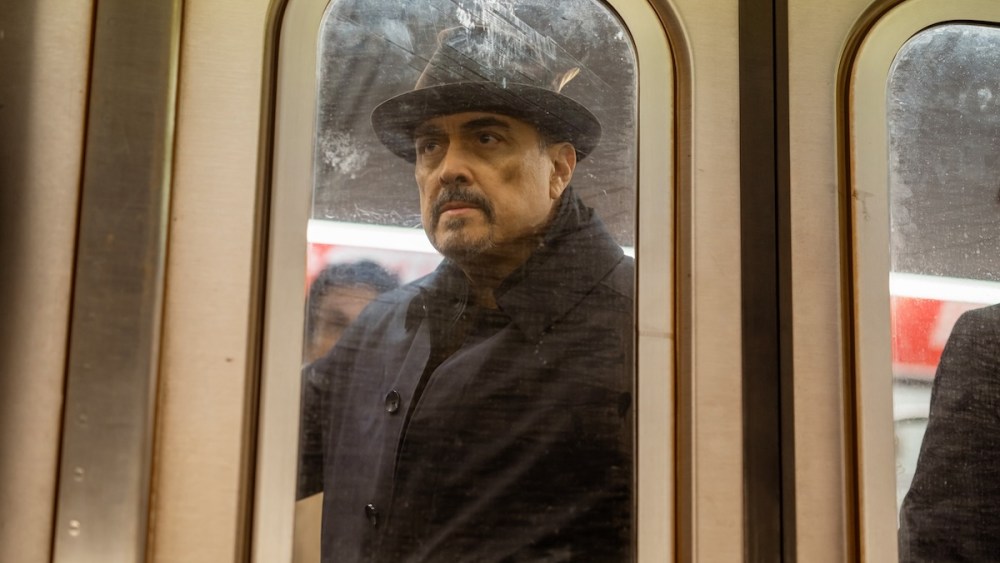

David Zayas On Dexters Resurrection A Game Changing Role And Career Highlight

Sep 03, 2025

David Zayas On Dexters Resurrection A Game Changing Role And Career Highlight

Sep 03, 2025 -

Confirmed Dele Alli Parts Ways With Como By Mutual Consent

Sep 03, 2025

Confirmed Dele Alli Parts Ways With Como By Mutual Consent

Sep 03, 2025 -

Dexters Final Act A Reassessment Of Seasons 6 8

Sep 03, 2025

Dexters Final Act A Reassessment Of Seasons 6 8

Sep 03, 2025 -

Cambodia Police Investigate Murder Of British Woman In Phnom Penh

Sep 03, 2025

Cambodia Police Investigate Murder Of British Woman In Phnom Penh

Sep 03, 2025

Latest Posts

-

Alabama Selected For Space Command Understanding The Decisions Significance

Sep 04, 2025

Alabama Selected For Space Command Understanding The Decisions Significance

Sep 04, 2025 -

Sistas Season 9 Episode 8 Where To Stream It For Free Legally

Sep 04, 2025

Sistas Season 9 Episode 8 Where To Stream It For Free Legally

Sep 04, 2025 -

Innovative Approach To Sex Offender Management Lowering Repeat Offenses

Sep 04, 2025

Innovative Approach To Sex Offender Management Lowering Repeat Offenses

Sep 04, 2025 -

Navigating A Non Fault Car Accident A Complete Guide

Sep 04, 2025

Navigating A Non Fault Car Accident A Complete Guide

Sep 04, 2025 -

Clash In Paris 2025 Reviewing Wwes Historic Event In France

Sep 04, 2025

Clash In Paris 2025 Reviewing Wwes Historic Event In France

Sep 04, 2025