ChatGPT And Child Wellbeing: Detecting Signs Of Acute Distress

The rise of AI chatbots like ChatGPT has brought major technological advances, but it also raises new challenges for child wellbeing. Alongside their educational and entertainment benefits, these platforms can expose children to harmful content, and the way a child uses them can also signal underlying distress. Understanding how to detect signs of acute distress in children who interact with AI chatbots is crucial for parents, educators, and developers alike.

The Double-Edged Sword of AI Interaction

ChatGPT and similar large language models (LLMs) give children access to vast amounts of information and creative tools. They can help with homework, spark imaginative storytelling, and even offer a sense of companionship. However, this accessibility also introduces risks: children might encounter inappropriate content, engage in risky online behavior, or unknowingly reveal personal information. More concerningly, the nature of their interactions with a chatbot can reveal underlying emotional distress.

Recognizing the Warning Signs

Identifying distress in children who use AI chatbots requires careful, attentive observation. Several key indicators should raise concern for parents and educators:

- Unusual Changes in Behavior: Sudden shifts in mood, increased anxiety, withdrawal from social activities, or sleep disturbances following chatbot use should be investigated.

- Excessive Use: Spending an inordinate amount of time with the chatbot at the expense of schoolwork, social engagements, or other responsibilities can be a sign of underlying issues.

- Revealing Sensitive Information: A child who shares personal details, such as an address, phone number, or family secrets, with the chatbot may not understand online safety, and this behavior could also be a symptom of deeper problems.

- Negative or Self-Harming Language: The content of their conversations with the chatbot, if accessible, might reveal self-harm ideation, suicidal thoughts, or expressions of deep unhappiness.

- Obsessive Engagement with Specific Topics: Repeatedly asking the chatbot about disturbing or sensitive subjects may point to a preoccupation that warrants professional attention.

The Role of Parental Monitoring and Education

Parental monitoring and open communication are crucial in mitigating these risks. Regularly checking children's online activity, engaging in conversations about online safety, and establishing clear rules for using AI chatbots are essential. Educating children about responsible online behavior, the importance of privacy, and the potential dangers of sharing personal information is equally vital.

Technological Solutions and Future Developments

Developers are actively working on incorporating safety features into AI chatbots, including content filters and mechanisms to detect and report concerning conversations. However, these measures are not foolproof, and continuous improvement is needed. The development of AI-powered detection systems that can identify signs of child distress within chatbot interactions is an area of active research.

Seeking Professional Help

If you suspect a child is experiencing acute distress related to their interaction with a chatbot like ChatGPT, seeking professional help is crucial. Contacting a child psychologist, therapist, or other mental health professional can provide the necessary support and guidance. Remember, early intervention is key. [Link to a reputable mental health resource for children].

Conclusion

While AI chatbots offer significant opportunities for learning and engagement, their potential impact on child wellbeing requires careful consideration. By understanding the warning signs of distress, promoting responsible online behavior, and utilizing available resources, we can work towards creating a safer online environment for children interacting with AI technologies. Open communication, parental involvement, and ongoing technological advancements are vital in ensuring that the benefits of AI are realized without compromising the wellbeing of our children.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on ChatGPT And Child Wellbeing: Detecting Signs Of Acute Distress. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Solve Nyt Connections Puzzle 816 September 4th Hints And Answers

Sep 04, 2025

Solve Nyt Connections Puzzle 816 September 4th Hints And Answers

Sep 04, 2025 -

Mexican Quinceanera Goes Viral From Empty Party To Stadium Celebration

Sep 04, 2025

Mexican Quinceanera Goes Viral From Empty Party To Stadium Celebration

Sep 04, 2025 -

Googles Gemini Powered Nest Smart Speaker A Leak Reveals Key Features

Sep 04, 2025

Googles Gemini Powered Nest Smart Speaker A Leak Reveals Key Features

Sep 04, 2025 -

Woman Seriously Hurt In Two Vehicle Collision On 16th Street Sacramento

Sep 04, 2025

Woman Seriously Hurt In Two Vehicle Collision On 16th Street Sacramento

Sep 04, 2025 -

Universals 2026 Slate Loses Jordan Peeles Highly Anticipated Project

Sep 04, 2025

Universals 2026 Slate Loses Jordan Peeles Highly Anticipated Project

Sep 04, 2025

Latest Posts

-

The Ripple Effect A Distant Asian City And Russias War In Ukraine

Sep 05, 2025

The Ripple Effect A Distant Asian City And Russias War In Ukraine

Sep 05, 2025 -

Harvard Wins Judge Reverses Trump Era Research Funding Cuts

Sep 05, 2025

Harvard Wins Judge Reverses Trump Era Research Funding Cuts

Sep 05, 2025 -

Constipation In Children Parents Highlight Systemic Service Failures

Sep 05, 2025

Constipation In Children Parents Highlight Systemic Service Failures

Sep 05, 2025 -

Inadequate Care For Constipated Children A Parental Crisis

Sep 05, 2025

Inadequate Care For Constipated Children A Parental Crisis

Sep 05, 2025 -

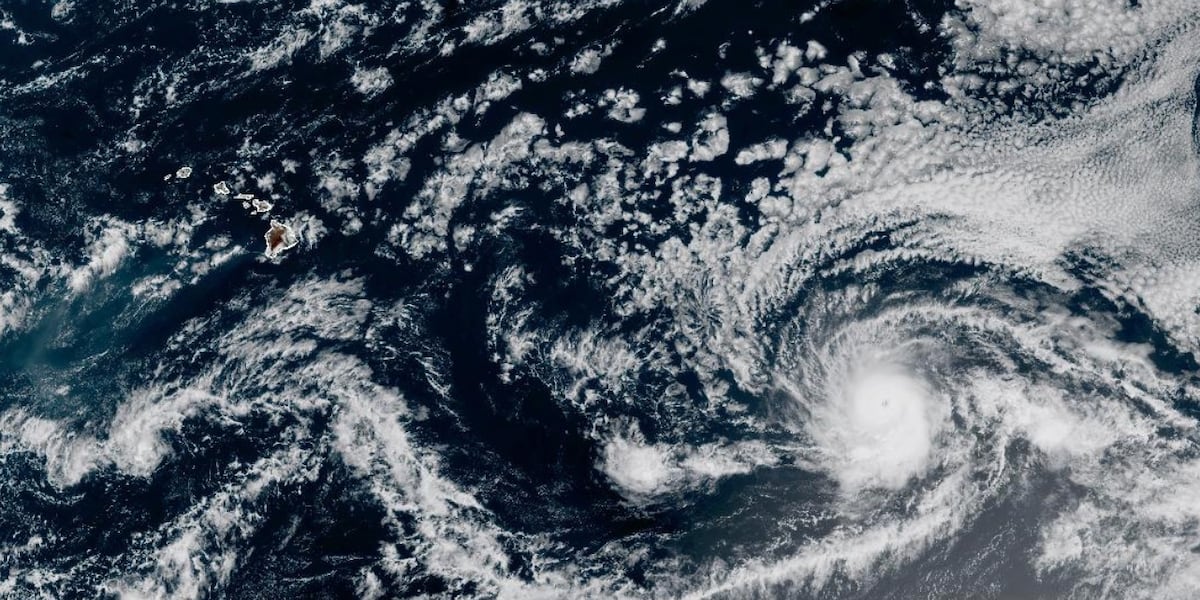

Kiko Remains A Major Hurricane Potential For Catastrophic Impacts On Coastal Regions

Sep 05, 2025

Kiko Remains A Major Hurricane Potential For Catastrophic Impacts On Coastal Regions

Sep 05, 2025