Rise Of 'AI Psychosis': Microsoft Boss Sounds The Alarm

Rise of 'AI Psychosis': Microsoft Boss Sounds the Alarm on Emerging Mental Health Risks

The rapid advancement of artificial intelligence (AI) is transforming industries and daily life. Amid the excitement, a warning has come from inside the industry: Mustafa Suleyman, the chief executive of Microsoft AI, has voiced concern about a rise in reports of "AI psychosis," highlighting the mental health risks associated with increasingly sophisticated AI systems. This isn't just a hypothetical concern; it's a growing issue that demands attention and proactive solutions.

<h3>What is "AI Psychosis"?</h3>

While not a formally recognized medical condition, the term "AI psychosis" encapsulates the potential for AI to negatively impact mental well-being. This isn't about AI directly causing psychosis in the traditional sense. Instead, it refers to the psychological effects stemming from:

- Over-reliance on AI: Becoming excessively dependent on AI for decision-making, problem-solving, and even social interaction can lead to a diminished capacity for independent thought and critical thinking. This dependence can manifest as anxiety, confusion, and a decreased sense of self-efficacy.

- Information overload and misinformation: The sheer volume of information generated by AI, coupled with the spread of misinformation and deepfakes, can overwhelm individuals, leading to stress, anxiety, and even paranoia. The constant bombardment of information can erode trust and create a sense of instability.

- Job displacement and economic insecurity: AI has clear potential to automate work. The resulting job displacement and economic uncertainty contribute to stress, anxiety, and depression, affecting mental health on a large scale. This is a broad societal challenge that requires solutions beyond addressing "AI psychosis" itself.

- Social isolation and decreased human interaction: As AI-powered tools become increasingly sophisticated in mimicking human interaction, there's a risk of decreased face-to-face interaction, potentially leading to loneliness and social isolation. This impacts mental well-being significantly, especially among vulnerable populations.

<h3>Suleyman's Warning and the Path Forward</h3>

The Microsoft AI chief's comments aren't simply a call for caution; they're a call to action. He emphasizes the need for responsible AI development, highlighting the importance of ethical considerations and the mitigation of potential risks to mental health. This requires a multi-pronged approach:

- Developing ethical AI guidelines: Robust ethical frameworks are crucial to ensure AI systems are developed and deployed responsibly, prioritizing human well-being. This requires collaboration between tech companies, policymakers, and ethicists.

- Promoting media literacy and critical thinking skills: Equipping individuals with the skills to critically evaluate information and identify misinformation is crucial in navigating the complexities of the AI age. Education plays a vital role here.

- Investing in mental health resources: As AI transforms the world of work and social interaction, increased investment in mental health services is essential to support individuals coping with the potential psychological impacts.

- Fostering open dialogue and public awareness: Open conversations about the potential risks of AI are crucial to fostering understanding and promoting proactive measures.

<h3>The Future of AI and Mental Health</h3>

The rise of AI presents both incredible opportunities and significant challenges. Addressing the potential for "AI psychosis" is not about hindering technological progress; it's about ensuring that this powerful technology is developed responsibly and ethically, with human well-being as the priority. By addressing these challenges early, we can benefit from the transformative power of AI while mitigating its potential negative impact on our mental health. The conversation has begun, and it's crucial that it continues, involving experts, policymakers, and the public alike. AI and mental health are inextricably linked, and responsible development is paramount. To learn more about the ethical considerations surrounding AI development, explore resources from organizations such as the AI Now Institute.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Rise Of 'AI Psychosis': Microsoft Boss Sounds The Alarm. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Al Wild Card Race Strength Of Schedule Impacts Yankees Rangers

Aug 23, 2025

Al Wild Card Race Strength Of Schedule Impacts Yankees Rangers

Aug 23, 2025 -

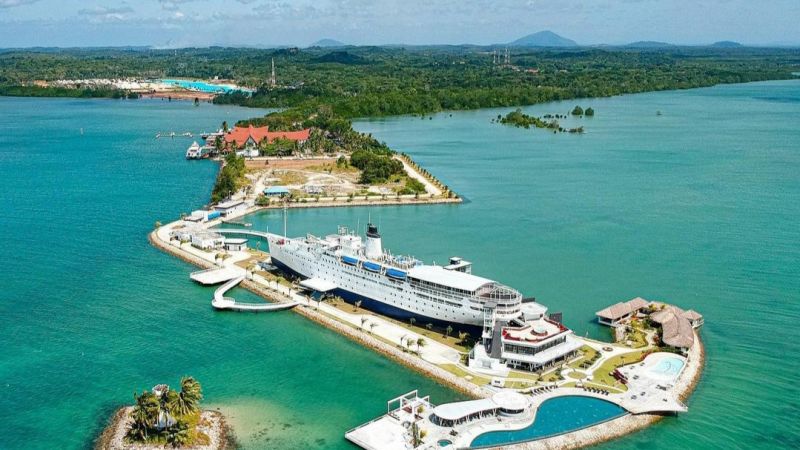

From Ocean Liner To Hotel A 18 Million Restoration

Aug 23, 2025

From Ocean Liner To Hotel A 18 Million Restoration

Aug 23, 2025 -

Behind The Scenes Look Jim Curtis Shows Support For Jennifer Aniston

Aug 23, 2025

Behind The Scenes Look Jim Curtis Shows Support For Jennifer Aniston

Aug 23, 2025 -

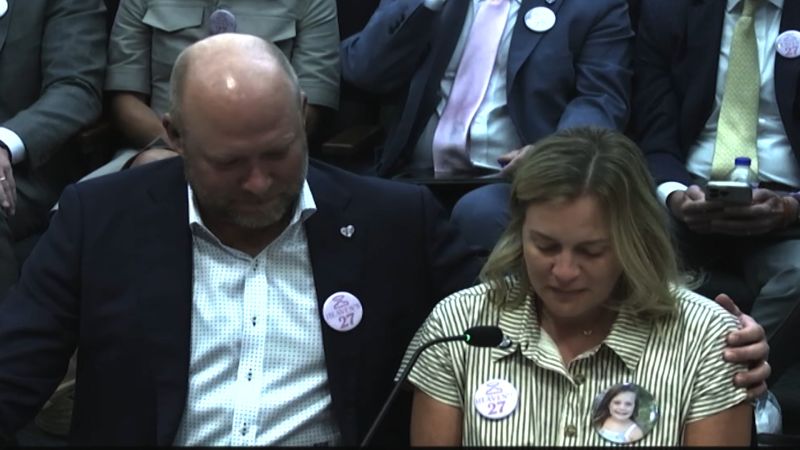

Emotional Testimony Parents Recount Camp Mystic Flood Disaster

Aug 23, 2025

Emotional Testimony Parents Recount Camp Mystic Flood Disaster

Aug 23, 2025 -

Tyquan Polks Season In Jeopardy Shoulder Surgery Confirmed

Aug 23, 2025

Tyquan Polks Season In Jeopardy Shoulder Surgery Confirmed

Aug 23, 2025

Latest Posts

-

Alcaraz And Sinners Us Open 2025 Paths A Comparative Draw Analysis

Aug 23, 2025

Alcaraz And Sinners Us Open 2025 Paths A Comparative Draw Analysis

Aug 23, 2025 -

How To Stream Or Watch The Detroit Lions Vs Houston Texans Preseason Game Live

Aug 23, 2025

How To Stream Or Watch The Detroit Lions Vs Houston Texans Preseason Game Live

Aug 23, 2025 -

Noel Clarkes Libel Case Against The Guardian Dismissed

Aug 23, 2025

Noel Clarkes Libel Case Against The Guardian Dismissed

Aug 23, 2025 -

Hawaii Rainbow Warriors Face Stanford In Season Opener National Tv Broadcast

Aug 23, 2025

Hawaii Rainbow Warriors Face Stanford In Season Opener National Tv Broadcast

Aug 23, 2025 -

Country Star Weighs In Charley Crocketts Public Backing Of Beyonce Amidst Ongoing Debate

Aug 23, 2025

Country Star Weighs In Charley Crocketts Public Backing Of Beyonce Amidst Ongoing Debate

Aug 23, 2025